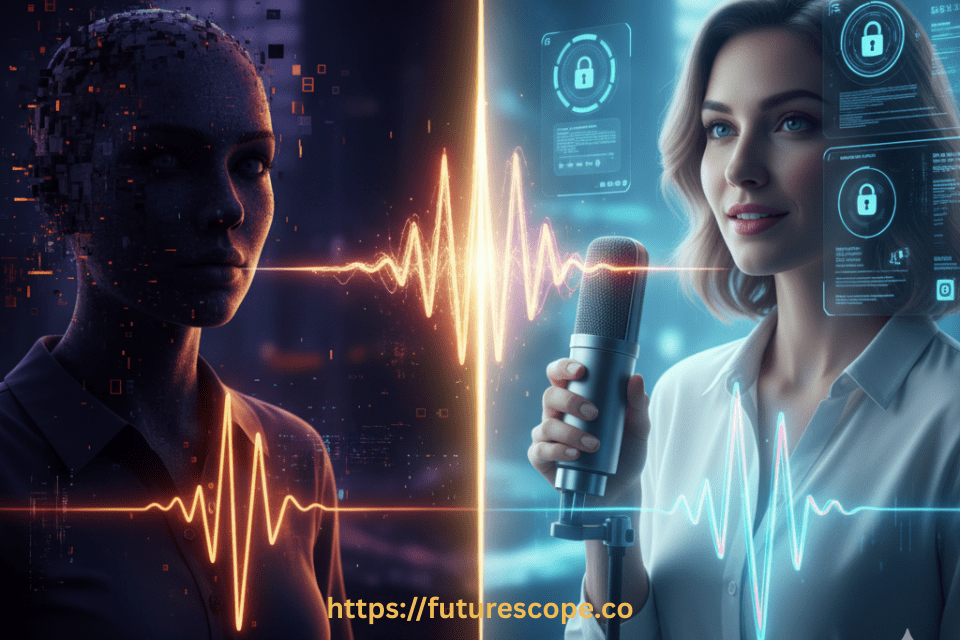

Deepfake AI has rapidly evolved from an experimental technology into a powerful cybercrime weapon. With only a few seconds of recorded audio, attackers can now generate hyper-realistic synthetic voices capable of impersonating executives, customers, or other trusted individuals. According to global cybersecurity reports, deepfake-enabled fraud attempts have increased by more than 3,000% since 2022, with voice cloning emerging as one of the fastest-growing attack vectors. Unlike traditional scams, voice deepfakes exploit human trust, bypassing skepticism through familiar tone, accent, and speech patterns. As organizations increasingly rely on voice interfaces such as call centers, voice authentication, smart assistants, and remote identity verification, the need for advanced voice security has never been greater.

Understanding the Modern Deepfake Attack Surface

The modern attack surface has expanded far beyond email phishing and malware. Voice-driven systems now represent high-value targets, including customer support lines, banking IVRs, voice-based MFA, VoIP platforms, and remote work communication tools. Attackers exploit publicly available audio from social media, webinars, podcasts, or voicemail greetings to train AI voice models. These models are then used in real-time impersonation attacks, where fraudsters place live calls that sound indistinguishable from legitimate users. This creates a dangerous blend of social engineering and AI automation, allowing attackers to manipulate employees, authorize financial transactions, or reset account credentials without triggering traditional security controls.

What Is Voice Security and Why Does It Matter?

Voice security refers to a set of technologies designed to verify, analyze, and protect voice interactions from fraud, impersonation, and synthetic manipulation. Unlike passwords or knowledge-based authentication, voice security relies on biometric and behavioral traits that are extremely difficult to replicate perfectly, even with advanced AI. Modern voice security systems analyze hundreds of micro-signals within speech—such as frequency stability, vocal tract resonance, breathing patterns, and timing inconsistencies—to detect whether a voice is human, live, and authentic. This makes voice security a powerful defense layer against deepfake AI, especially in environments where voice is a primary authentication factor.
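
To make the idea of a speech micro-signal concrete, here is a minimal sketch of one classic frequency-stability measure: local jitter, the average cycle-to-cycle change in pitch period relative to the mean period. The function name and the sample values are invented for illustration, and nothing here is taken from a specific product. A production system would combine many such features and would not rely on any single one.

```python
from statistics import mean


def local_jitter(periods_ms: list[float]) -> float:
    """Mean absolute difference between consecutive pitch periods,
    divided by the mean period (a relative, unitless value)."""
    if len(periods_ms) < 2:
        raise ValueError("need at least two pitch periods")
    diffs = [abs(b - a) for a, b in zip(periods_ms, periods_ms[1:])]
    return mean(diffs) / mean(periods_ms)


# Hypothetical pitch periods in milliseconds, made up for this example.
natural_like = [8.00, 8.06, 7.95, 8.04, 7.97, 8.05]  # small natural wobble
flat_contour = [8.00, 8.00, 8.00, 8.00, 8.00, 8.00]  # perfectly regular

print(f"natural-like jitter: {local_jitter(natural_like):.4f}")
print(f"flat-contour jitter: {local_jitter(flat_contour):.4f}")
```

An unusually flat or unusually erratic value would be, at most, one weak hint that feeds a larger model alongside the other signals described above.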

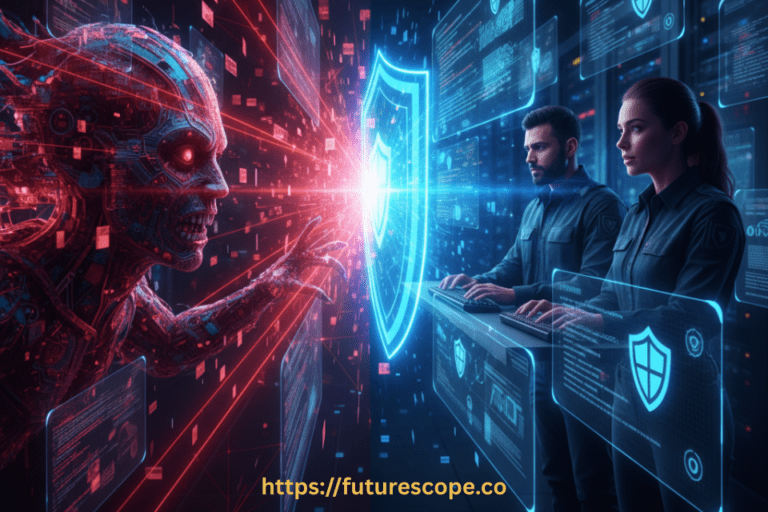

Key Features of Voice Security Solutions Against Deepfakes

Advanced voice security platforms combine multiple detection layers to combat deepfake attacks effectively. Voice biometrics identify unique speaker characteristics, while liveness detection confirms that audio is generated by a real human in real time, not replayed or synthesized. AI-based deepfake detection engines analyze spectral artifacts, phase distortions, and neural synthesis fingerprints commonly present in cloned audio. Many enterprise solutions also offer continuous authentication, monitoring the voice throughout a conversation instead of relying on a single verification moment. When combined with behavioral analytics, these features can reduce voice fraud attempts by up to 90%, according to industry benchmarks.
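
Continuous authentication can be pictured as a rolling check rather than a one-time gate. The sketch below assumes that some upstream engine already produces a speaker-match score between 0 and 1 for each short audio segment. The class name, window size, threshold, and scores are all hypothetical.

```python
from collections import deque


class ContinuousVoiceMonitor:
    """Tracks a rolling mean of per-segment speaker-match scores."""

    def __init__(self, window: int = 3, threshold: float = 0.7):
        self.scores = deque(maxlen=window)  # keeps only the latest scores
        self.threshold = threshold

    def update(self, segment_score: float) -> bool:
        """Record a new score; return True while the rolling mean
        stays at or above the threshold."""
        self.scores.append(segment_score)
        return sum(self.scores) / len(self.scores) >= self.threshold


# Hypothetical scores: the voice stops matching partway through the call.
monitor = ContinuousVoiceMonitor(window=3, threshold=0.7)
call_scores = [0.92, 0.90, 0.88, 0.40, 0.35, 0.30]
statuses = [monitor.update(score) for score in call_scores]
print(statuses)
```

Averaging over a window trades a little detection delay for fewer false alarms from a single noisy segment. That is a design choice for this sketch, not a claim about how any particular vendor implements it.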

Key Statistics Highlighting the Deepfake Voice Threat

The scale of the problem underscores why voice security is critical. Studies show that over 70% of people cannot reliably distinguish AI-generated voices from real ones, even when warned in advance. Financial institutions report millions of dollars in losses annually from voice-based social engineering scams, including CEO fraud and account takeovers. Additionally, the volume of synthetic audio is growing exponentially, with millions of new deepfake voice samples circulating each year. These statistics reveal a clear reality: human judgment alone is no longer sufficient to defend against AI-driven voice attacks.

Key Statistics

- Studies show humans struggle to distinguish synthetic voices, with accuracy rates often below 70%.

- By 2026, voice cloning attacks are expected to rise by over 200%, driven by cheap and accessible AI tools.

- Financial institutions report that voice fraud attempts increased 350% between 2023 and 2025, highlighting the urgency of robust defenses.

How Does Voice Security Protect Against Deepfake Attacks?

Voice security protects organizations by shifting trust away from what humans hear and toward machine-level verification. When a call or voice command is initiated, the system evaluates whether the voice matches the enrolled biometric profile and whether it exhibits characteristics of live human speech. If anomalies are detected—such as unnatural pitch transitions or spectral inconsistencies—the interaction can be flagged, challenged, or blocked instantly. By integrating voice security with multi-factor authentication and risk-based decision engines, organizations can stop deepfake attacks before sensitive actions are approved, significantly reducing fraud exposure.
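
The flag, challenge, or block flow described above could be sketched roughly as follows. This is an illustrative sketch only: the `VoiceCheck` fields, the `decide` function, and every threshold are assumptions made for this example. Real scores would come from a biometric and liveness engine.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    CHALLENGE = "challenge"
    BLOCK = "block"


@dataclass
class VoiceCheck:
    match_score: float     # similarity to the enrolled voiceprint, 0..1
    liveness_score: float  # confidence that the audio is live speech, 0..1
    anomaly_flags: int     # count of detected artifacts (pitch, spectrum)


def decide(check: VoiceCheck,
           match_min: float = 0.8,
           liveness_min: float = 0.7,
           block_below: float = 0.4) -> Decision:
    """Map voice signals to an action. Thresholds are hypothetical."""
    # Very low scores on either signal: stop the interaction outright.
    if check.match_score < block_below or check.liveness_score < block_below:
        return Decision.BLOCK
    # Borderline scores or any anomaly: ask for an additional factor.
    if (check.match_score < match_min
            or check.liveness_score < liveness_min
            or check.anomaly_flags > 0):
        return Decision.CHALLENGE
    return Decision.ALLOW


print(decide(VoiceCheck(0.95, 0.90, 0)).value)
print(decide(VoiceCheck(0.95, 0.90, 2)).value)
print(decide(VoiceCheck(0.30, 0.90, 0)).value)
```

Keeping a middle "challenge" outcome lets a borderline caller prove identity through another factor instead of being rejected outright.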

Tools Powering Modern Voice Security

Modern voice security solutions rely on a combination of machine learning, signal processing, and neural network analysis. Popular tools include voice biometric engines, real-time audio forensics platforms, and deepfake detection APIs that integrate directly into call centers and authentication workflows. Many solutions now support cloud-based deployment, enabling real-time protection across global operations. Some platforms also incorporate threat intelligence feeds, continuously updating detection models as new deepfake techniques emerge. These tools ensure that defenses evolve alongside attackers, rather than becoming obsolete.

Tools for Deepfake Attack Protection

Organizations are deploying specialized tools to counter deepfake threats:

- AI-Powered Detection Systems: Identify synthetic audio patterns invisible to human ears.

- Voice Watermarking: Embeds hidden signals in legitimate recordings to verify authenticity.

- Behavioral Biometrics: Tracks unique speech habits, pauses, and emotional tones.

- Threat Intelligence Platforms: Monitor emerging deepfake attack vectors across industries.
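
To give a feel for how voice watermarking from the list above can work, here is a toy spread-spectrum sketch: a key-seeded sequence of +1/-1 values is added to the audio at low amplitude, and detection correlates the audio against the same sequence. All names and parameters are hypothetical, and the strength is exaggerated for clarity. Real watermarking schemes are designed to be inaudible and to survive compression and re-recording, which this sketch does not attempt.

```python
import math
import random


def pn_sequence(key: int, n: int) -> list[float]:
    """Key-seeded pseudo-random sequence of +1.0 / -1.0 values."""
    rng = random.Random(key)
    return [rng.choice((-1.0, 1.0)) for _ in range(n)]


def embed(samples: list[float], key: int, strength: float = 0.05) -> list[float]:
    """Add the low-amplitude keyed sequence to the audio samples."""
    pn = pn_sequence(key, len(samples))
    return [s + strength * p for s, p in zip(samples, pn)]


def detect(samples: list[float], key: int, threshold: float = 0.025) -> bool:
    """Correlate with the keyed sequence; a high value suggests the mark."""
    pn = pn_sequence(key, len(samples))
    corr = sum(s * p for s, p in zip(samples, pn)) / len(samples)
    return corr > threshold


# Stand-in "recording": one second of a 220 Hz tone sampled at 8 kHz.
host = [0.5 * math.sin(2 * math.pi * 220 * t / 8000) for t in range(8000)]
marked = embed(host, key=1234)

print(detect(marked, key=1234))  # audio checked with the embedding key
print(detect(host, key=1234))    # original audio, never marked
print(detect(marked, key=9999))  # marked audio checked with a different key
```

Without the key, the added sequence looks like faint noise. With it, the correlation stands out clearly from the unmarked case.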

Best Practices for Deepfake Voice Attack Prevention

To maximize protection, organizations should adopt a layered security approach. Voice authentication should never operate alone; it must be paired with device intelligence, behavioral analytics, and contextual risk assessment. Adding step-up verification, such as callbacks or secondary approval channels, for high-risk transactions provides another safeguard. Regular employee training is essential, as staff must understand how AI-powered impersonation works and why traditional “trust your ears” instincts are no longer reliable. Finally, organizations should continuously test and update their voice security systems to adapt to the rapidly evolving deepfake landscape.
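
A layered policy with step-up verification might look something like the sketch below. The `CallContext` fields, the step names, and the amount threshold are hypothetical placeholders; an actual policy would reflect each organization's own risk appetite and channels.

```python
from dataclasses import dataclass


@dataclass
class CallContext:
    voice_verified: bool  # result of the voice security check
    known_device: bool    # device intelligence: seen before for this user
    amount: float         # value of the requested transaction


def required_steps(ctx: CallContext,
                   high_risk_amount: float = 10_000.0) -> list[str]:
    """Return the extra verification steps to complete before approval."""
    # A failed voice check is never rescued by the other layers.
    if not ctx.voice_verified:
        return ["manual_review"]
    steps: list[str] = []
    if not ctx.known_device:
        steps.append("one_time_passcode")
    if ctx.amount >= high_risk_amount:
        # Step-up: call back on a number already on file, plus a second approver.
        steps.append("callback_to_number_on_file")
        steps.append("secondary_approver")
    return steps


print(required_steps(CallContext(True, True, 250.0)))
print(required_steps(CallContext(True, False, 50_000.0)))
print(required_steps(CallContext(False, True, 250.0)))
```

The point of the structure is that a convincing voice alone never unlocks a high-risk action: the callback and second approver run over channels an attacker on the line does not control.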

The Future of Voice Security in an AI-Driven World

As generative AI continues to advance, deepfake attacks will become cheaper, faster, and more convincing. Voice security is no longer optional—it is a strategic necessity for any organization that relies on voice interactions. By combining AI-driven detection, biometric verification, and strong operational best practices, voice security provides a scalable and resilient defense against one of the most dangerous threats of the digital age. Organizations that invest early will not only prevent fraud but also build trust, protect brand reputation, and future-proof their security infrastructure against the next generation of AI attacks.

Final Thoughts

Voice security is now a frontline defense against deepfake AI. By combining advanced detection technologies, layered authentication, and proactive education, organizations and individuals can safeguard digital trust in an era where synthetic voices are increasingly weaponized.

Frequently Asked Questions on Voice Security & Deepfake AI

Q1: What is deepfake voice technology?

Deepfake voice technology uses AI to clone or synthesize human voices, often from just a few seconds of audio, producing speech that is nearly indistinguishable from the real thing.

Q2: How can voice security protect against deepfake attacks?

Voice security employs liveness detection, multi-factor authentication, and AI-powered fraud detection to identify synthetic voices and prevent impersonation.

Q3: What industries are most at risk from deepfake voice attacks?

Financial institutions, call centers, government agencies, and enterprises with sensitive communications are prime targets due to reliance on voice authentication.

Q4: What are the best practices to defend against deepfake AI?

Organizations should adopt layered security, educate users, implement safe word protocols, and regularly update detection tools to stay ahead of evolving threats.

Q5: Are there tools available to detect deepfake voices?

Yes, tools such as AI-powered detection systems, voice watermarking, and behavioral biometrics are increasingly used to identify and block synthetic audio.