Artificial intelligence has transformed cybersecurity on both sides of the battlefield. While defenders use AI to detect and respond to threats faster, attackers now leverage AI-generated malware, deepfake content, automated phishing, and synthetic identities to scale attacks with unprecedented speed and realism. Industry reports estimate that AI-powered cyberattacks have increased by more than 250% over the past two years, with deepfake impersonation, automated social engineering, and adaptive malware becoming mainstream tools for cybercriminals. Unlike traditional threats, AI-generated attacks can adapt and evolve to bypass static defenses, forcing cybersecurity experts to rethink how protection is designed and deployed.

Understanding the Modern AI-Driven Attack Surface

The modern attack surface has expanded dramatically due to cloud adoption, remote work, APIs, IoT devices, and AI-powered business applications. Attackers now exploit voice systems, video conferencing platforms, identity verification workflows, customer support channels, and software supply chains using AI-generated artifacts. Deepfake audio and video are increasingly used to impersonate executives or trusted partners, while generative AI produces highly personalized phishing messages that can outperform human-written scams. This convergence of AI and digital infrastructure creates a dynamic, constantly shifting attack surface, where threats emerge faster than traditional rule-based security systems can adapt.

AI-generated threats expand the attack surface in unprecedented ways:

- Deepfake Impersonation: Fraudsters use synthetic voices and videos to impersonate executives, politicians, or family members.

- AI-Powered Phishing: Large language models generate convincing phishing emails and scripts at scale.

- Automated Reconnaissance: AI-assisted scanning tools can probe networks at reported rates of 36,000 probes per second, identifying vulnerabilities faster than defenders can patch them.

- Trust Exploitation: Deepfakes undermine trust, the most fundamental element of communication, making it harder to distinguish legitimate interactions from fraudulent ones.
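
On the defensive side, the speed of automated reconnaissance is also what makes it observable. The sketch below is a minimal, hypothetical scan detector, assuming connection events (timestamp, source IP, destination port) are already available from firewall or flow logs. The class name `ScanDetector` and its thresholds are illustrative assumptions, not values from any particular product. It flags a source that touches more distinct ports inside a sliding time window than a normal client would.

```python
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass(frozen=True)
class ConnectionEvent:
    timestamp: float  # seconds
    src_ip: str
    dst_port: int


class ScanDetector:
    """Hypothetical detector: flags sources that hit many distinct ports in a short window."""

    def __init__(self, window_seconds: float = 10.0, max_distinct_ports: int = 100):
        self.window_seconds = window_seconds
        self.max_distinct_ports = max_distinct_ports
        # Per-source queue of (timestamp, port) pairs still inside the window
        self._events: dict[str, deque] = defaultdict(deque)

    def observe(self, event: ConnectionEvent) -> bool:
        """Record one event; return True if its source now looks like a scanner."""
        queue = self._events[event.src_ip]
        queue.append((event.timestamp, event.dst_port))
        # Evict events that have fallen out of the sliding window
        while queue and event.timestamp - queue[0][0] > self.window_seconds:
            queue.popleft()
        distinct_ports = {port for _, port in queue}
        return len(distinct_ports) > self.max_distinct_ports


# Simulated burst: one source hits 500 different ports in half a second
detector = ScanDetector(window_seconds=10.0, max_distinct_ports=100)
flagged = [detector.observe(ConnectionEvent(i * 0.001, "203.0.113.7", i)) for i in range(500)]
print(any(flagged))  # True
```

Counting distinct ports rather than raw connections keeps a busy but legitimate client (many requests to port 443) below the threshold.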

Key Statistics

- AI-powered cyberattacks surged 72% year-over-year in 2025, with 87% of organizations reporting AI-driven incidents.

- Voice phishing (vishing) attacks rose 442% between the first and second halves of 2024, fueled by AI-generated impersonation tactics.

- The average cost of a data breach reached $4.88 million in 2024, with AI-driven attacks accounting for 16% of incidents.

Why Are Traditional Cybersecurity Defenses No Longer Enough?

Legacy security tools rely heavily on static signatures, predefined rules, and historical indicators of compromise, which are ineffective against AI-generated threats that mutate in real time. AI-powered phishing emails, for example, can dynamically change language, tone, and context to evade spam filters. Similarly, deepfake attacks exploit human trust rather than software vulnerabilities, making employees and executives prime targets. Studies indicate that over 70% of professionals struggle to identify AI-generated impersonation attempts, highlighting the limitations of awareness training alone. This reality has pushed cybersecurity experts to adopt AI-native defense strategies capable of operating at machine speed.
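
The weakness of static signatures can be shown with the simplest kind: an exact hash of a known sample. This is a deliberately simplified illustration, assuming a hypothetical signature set built from one previously seen phishing lure; real engines also use pattern rules and fuzzy hashing, but they share the same dependence on indicators that have been seen before. A reworded variant, which a generative model can produce on every send, no longer matches.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Exact fingerprint of a sample: any change to the bytes changes the hash."""
    return hashlib.sha256(data).hexdigest()


# Hypothetical signature set built from one previously observed phishing lure
known_lure = b"Urgent: verify your payroll account at http://example.test/login"
SIGNATURES = {sha256_hex(known_lure)}


def signature_match(message: bytes) -> bool:
    """Static check: only byte-for-byte copies of known samples are flagged."""
    return sha256_hex(message) in SIGNATURES


# The same lure, reworded as a generative model might do for each recipient
reworded_lure = b"Action needed: please confirm your payroll details at http://example.test/login"

print(signature_match(known_lure))     # True
print(signature_match(reworded_lure))  # False
```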

How Do Cybersecurity Experts Use AI to Fight AI Threats?

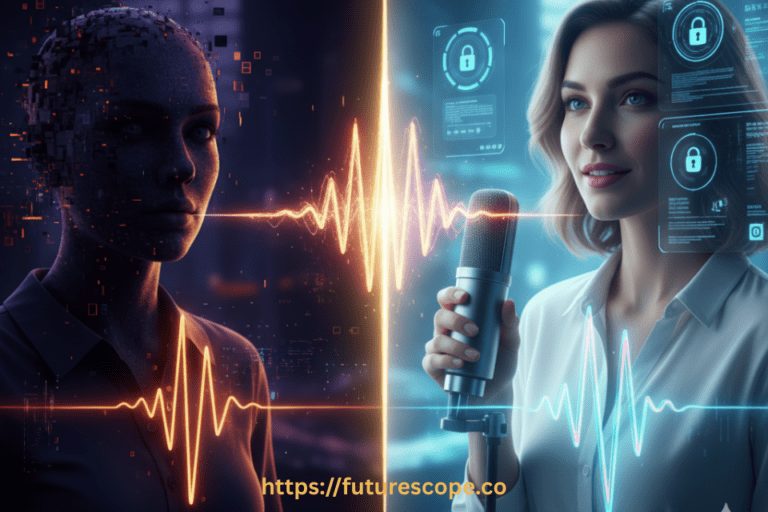

To counter AI-generated attacks, cybersecurity teams are increasingly deploying machine learning and behavioral analytics across detection and response workflows. AI-powered security platforms analyze massive volumes of data to identify anomalies in network traffic, user behavior, and system activity that indicate malicious intent. Behavior-based detection enables systems to spot threats even when malware signatures are unknown. In the context of deepfake protection, AI models analyze subtle inconsistencies in audio, video, and text—such as spectral artifacts, unnatural speech patterns, or visual mismatches—to distinguish synthetic content from real human interactions.
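
Behavior-based detection can be illustrated with the simplest possible baseline model. This is a minimal sketch assuming a per-user history of one numeric feature (here, daily upload volume); the function name `is_anomalous` and the 3-sigma threshold are illustrative assumptions, and production platforms typically model many features jointly. The check needs no malware signature at all: it only asks whether current behavior departs from the account's own past.

```python
from statistics import mean, stdev


def is_anomalous(history: list[float], observation: float, z_threshold: float = 3.0) -> bool:
    """Return True if `observation` lies more than `z_threshold` standard deviations
    from the mean of this entity's own history."""
    if len(history) < 2:
        return False  # too little data to form a baseline
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation
    if sigma == 0:
        return observation != mu  # perfectly flat baseline: any change is unusual
    return abs(observation - mu) / sigma > z_threshold


# Hypothetical baseline: megabytes uploaded per day by one user account
daily_upload_mb = [120, 95, 130, 110, 105, 125, 115]
print(is_anomalous(daily_upload_mb, 140))   # False: within normal variation
print(is_anomalous(daily_upload_mb, 2400))  # True: possible data exfiltration
```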

Deepfake Attack Protection and Identity Defense

Deepfake attacks represent one of the most dangerous AI-driven threats, particularly for enterprises, financial institutions, and government organizations. Cybersecurity experts combat these attacks using multimodal verification, combining voice biometrics, facial recognition, liveness detection, and contextual risk analysis. Real-time deepfake detection systems inspect audio and video streams for artifacts characteristic of AI generation, while continuous authentication re-verifies identity throughout a session rather than only at login. Some organizations deploying these layered defenses report reductions of up to 90% in voice and impersonation-based fraud.
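
One way to picture multimodal verification is as score fusion with a hard liveness gate. The sketch below assumes each engine (voice biometrics, face recognition, liveness detection, contextual risk analysis) already emits a score between 0 and 1; the weights, thresholds, and the `identity_decision` function are hypothetical and would need tuning on real data. Liveness is treated as a gate rather than just another weighted input because a synthetic clip may match a victim's voice and face very closely while still failing liveness checks.

```python
from dataclasses import dataclass


@dataclass
class VerificationSignals:
    voice_match: float   # 0..1 similarity from a voice-biometric engine
    face_match: float    # 0..1 similarity from a face-recognition engine
    liveness: float      # 0..1 confidence the subject is live, not replayed or synthetic
    context_risk: float  # 0..1 risk from device, location, request type, etc.


# Hypothetical weights and thresholds; real values would be tuned on labeled data
WEIGHTS = {"voice_match": 0.35, "face_match": 0.35, "liveness": 0.30}
MIN_LIVENESS = 0.5


def identity_decision(s: VerificationSignals) -> str:
    """Fuse per-modality scores into 'allow', 'step_up', or 'deny'."""
    if s.liveness < MIN_LIVENESS:
        return "deny"  # likely replay or synthetic media, regardless of match scores
    confidence = (
        WEIGHTS["voice_match"] * s.voice_match
        + WEIGHTS["face_match"] * s.face_match
        + WEIGHTS["liveness"] * s.liveness
    )
    adjusted = confidence * (1.0 - s.context_risk)  # risky context discounts confidence
    if adjusted >= 0.75:
        return "allow"
    if adjusted >= 0.45:
        return "step_up"
    return "deny"


print(identity_decision(VerificationSignals(0.95, 0.93, 0.90, 0.05)))  # allow
print(identity_decision(VerificationSignals(0.97, 0.96, 0.20, 0.10)))  # deny: likely synthetic
print(identity_decision(VerificationSignals(0.90, 0.90, 0.90, 0.40)))  # step_up: risky context
```

For continuous authentication, the same decision would be re-run on fresh signals at intervals throughout a session instead of once at login.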

Key Security Features Used Against AI-Generated Threats

Modern cybersecurity platforms integrate several critical features to combat AI threats at scale. These include real-time anomaly detection, adaptive risk scoring, automated incident response, and continuous learning models that evolve with attacker behavior. Advanced zero-trust architectures limit access based on identity, context, and behavior rather than static credentials. Additionally, AI-powered threat intelligence platforms aggregate global attack data to identify emerging tactics before they spread widely. These features allow security teams to move from reactive defense to predictive threat prevention.
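
The zero-trust principle of deciding on identity, context, and behavior rather than static credentials or network location can be sketched as a per-request policy check. Everything here is a hypothetical illustration: the roles, resource names, and re-authentication windows are assumptions, and real deployments usually delegate this to a dedicated policy engine. Note that `on_corporate_network` is carried in the request but deliberately never consulted.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    user_role: str
    resource: str
    device_compliant: bool      # e.g. patched OS, disk encryption, EDR agent running
    mfa_age_minutes: int        # time since the user last completed strong authentication
    on_corporate_network: bool  # recorded but deliberately ignored: location grants no trust


# Hypothetical least-privilege mapping of roles to the resources they need
ROLE_PERMISSIONS = {
    "finance": {"payments-api", "ledger"},
    "engineer": {"ci-pipeline", "source-repo"},
}
SENSITIVE_RESOURCES = {"payments-api"}


def evaluate(request: AccessRequest) -> str:
    """Decide each request on identity, device, and context, never on network location."""
    if request.resource not in ROLE_PERMISSIONS.get(request.user_role, set()):
        return "deny"  # least privilege: this role does not need the resource
    if not request.device_compliant:
        return "deny"  # unmanaged or unhealthy device
    max_mfa_age = 15 if request.resource in SENSITIVE_RESOURCES else 8 * 60
    if request.mfa_age_minutes > max_mfa_age:
        return "reauthenticate"  # step-up: sensitive resources need recent MFA
    return "allow"


print(evaluate(AccessRequest("engineer", "payments-api", True, 5, True)))  # deny: outside role
print(evaluate(AccessRequest("finance", "payments-api", True, 5, False)))  # allow
print(evaluate(AccessRequest("finance", "payments-api", True, 60, True)))  # reauthenticate
```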

Tools Powering AI-Driven Cyber Defense

Cybersecurity experts rely on a growing ecosystem of AI-powered tools to defend against generative threats. These include AI-enhanced SIEM and SOAR platforms, endpoint detection and response (EDR), extended detection and response (XDR), deepfake detection engines, and identity threat detection systems. Cloud-native security tools provide real-time visibility across distributed environments, while automated remediation engines can contain threats within seconds. Many organizations also deploy AI governance and monitoring tools to secure their own internal AI systems against misuse or data leakage.
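
Automated remediation in SOAR tools is commonly organized as playbooks that map an alert type to an ordered list of response actions. The sketch below assumes a simple in-memory alert model; the action functions only record what they would do, standing in for the EDR or identity-provider API calls a real platform would make, and all names and the severity cutoff are hypothetical. Gating automatic containment on severity keeps lower-confidence alerts in front of a human analyst.

```python
from dataclasses import dataclass, field


@dataclass
class Alert:
    kind: str      # e.g. "credential_compromise", "malware_execution"
    severity: int  # 1 (low) .. 5 (critical)
    host: str
    user: str


@dataclass
class ResponseLog:
    actions: list[str] = field(default_factory=list)


# Hypothetical containment actions; a real platform would call EDR / IdP APIs here
def isolate_host(alert: Alert, log: ResponseLog) -> None:
    log.actions.append(f"isolate_host:{alert.host}")


def disable_account(alert: Alert, log: ResponseLog) -> None:
    log.actions.append(f"disable_account:{alert.user}")


def revoke_sessions(alert: Alert, log: ResponseLog) -> None:
    log.actions.append(f"revoke_sessions:{alert.user}")


def open_ticket(alert: Alert, log: ResponseLog) -> None:
    log.actions.append(f"open_ticket:{alert.kind}:sev{alert.severity}")


PLAYBOOKS = {
    "malware_execution": [isolate_host, open_ticket],
    "credential_compromise": [disable_account, revoke_sessions, open_ticket],
}
AUTO_CONTAIN_SEVERITY = 4  # below this, only open a ticket for analyst review


def run_playbook(alert: Alert) -> ResponseLog:
    """Run the playbook for this alert type, or just ticket it if severity is low."""
    log = ResponseLog()
    steps = PLAYBOOKS.get(alert.kind, [open_ticket])
    if alert.severity < AUTO_CONTAIN_SEVERITY:
        steps = [open_ticket]
    for step in steps:
        step(alert, log)
    return log


print(run_playbook(Alert("credential_compromise", 5, "laptop-42", "jdoe")).actions)
# ['disable_account:jdoe', 'revoke_sessions:jdoe', 'open_ticket:credential_compromise:sev5']
```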

Best Practices for Defending Against AI-Generated Cyber Attacks

To effectively mitigate AI-driven threats, cybersecurity experts recommend a layered and adaptive security strategy. Organizations should combine AI-powered detection with zero-trust principles, continuous authentication, and least-privilege access controls. High-risk actions should trigger step-up verification or out-of-band confirmation. Employee training must evolve beyond basic phishing awareness to include AI-enabled social engineering scenarios, such as deepfake calls or synthetic executive requests. Regular red-teaming, AI threat modeling, and continuous system updates ensure defenses remain effective as attack techniques evolve.
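
Step-up and out-of-band confirmation can be made concrete for a classic deepfake scenario: an urgent payment request from an apparent executive. This is a minimal sketch under stated assumptions: the threshold, channel list, and the names `needs_out_of_band` and `OutOfBandChallenge` are hypothetical, and delivery of the code to the contact on file is left out. The design choice that matters is that confirmation travels over a channel registered in advance, never over contact details supplied in the suspicious request, so a convincing deepfake call alone cannot complete the transaction.

```python
import hmac
import secrets
import time
from dataclasses import dataclass


@dataclass
class PaymentRequest:
    requester: str
    amount: float
    beneficiary: str
    channel: str  # e.g. "portal", "email", "voice", "video"


# Hypothetical policy values
AMOUNT_THRESHOLD = 10_000
IMPERSONATION_PRONE_CHANNELS = {"email", "voice", "video"}


def needs_out_of_band(req: PaymentRequest, known_beneficiaries: set[str]) -> bool:
    """High-risk if large, to a new beneficiary, or requested over a spoofable channel."""
    return (
        req.amount >= AMOUNT_THRESHOLD
        or req.beneficiary not in known_beneficiaries
        or req.channel in IMPERSONATION_PRONE_CHANNELS
    )


class OutOfBandChallenge:
    """One-time code intended for a contact already on file for the requester."""

    def __init__(self, ttl_seconds: float = 300, max_attempts: int = 3):
        self.code = f"{secrets.randbelow(10**6):06d}"  # random 6-digit code
        self.expires_at = time.monotonic() + ttl_seconds
        self.attempts_left = max_attempts
        self.used = False

    def verify(self, submitted: str) -> bool:
        """Accept the code once, before expiry, within a limited number of attempts."""
        if self.used or self.attempts_left <= 0 or time.monotonic() > self.expires_at:
            return False
        self.attempts_left -= 1
        if hmac.compare_digest(self.code, submitted):  # constant-time comparison
            self.used = True  # single use: a replayed code is rejected
            return True
        return False


request = PaymentRequest("cfo", 250_000, "new-vendor-llc", "video")
print(needs_out_of_band(request, known_beneficiaries={"acme-supplies"}))  # True
```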

Features of Modern Cyber Defense

Cybersecurity experts are integrating advanced features to combat AI-generated threats:

- Deepfake Detection Tools: AI systems trained to spot inconsistencies in speech, facial movements, and audio signals.

- Zero Trust Architecture: Continuous verification of users and devices, assuming no interaction is inherently safe.

- Behavioral Biometrics: Tracking unique speech patterns, typing rhythms, and user behaviors to detect anomalies.

- AI-Powered Threat Intelligence: Real-time monitoring of attack trends across industries.
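
Behavioral biometrics such as typing rhythm can be illustrated with a toy keystroke-dynamics check. The sketch assumes key-down timestamps in milliseconds for a fixed phrase typed several times at enrollment; the function names and the 40 ms tolerance are hypothetical, and real systems typically use richer features (key hold times, digraph statistics) and learned models rather than a single distance threshold. It compares the gaps between keystrokes in a new attempt with the user's average gaps.

```python
from statistics import mean


def intervals(timestamps_ms: list[float]) -> list[float]:
    """Convert key-down timestamps into gaps between consecutive keystrokes."""
    return [b - a for a, b in zip(timestamps_ms, timestamps_ms[1:])]


def enroll(samples: list[list[float]]) -> list[float]:
    """Typing profile: mean gap at each position across enrollment samples."""
    per_sample = [intervals(s) for s in samples]
    return [mean(gaps) for gaps in zip(*per_sample)]


def rhythm_distance(profile: list[float], attempt_ms: list[float]) -> float:
    """Mean absolute difference (ms) between an attempt's gaps and the profile."""
    attempt = intervals(attempt_ms)
    if len(attempt) != len(profile):
        raise ValueError("attempt must use the same phrase as enrollment")
    return mean(abs(a - p) for a, p in zip(attempt, profile))


def matches_user(profile: list[float], attempt_ms: list[float], max_distance_ms: float = 40.0) -> bool:
    return rhythm_distance(profile, attempt_ms) <= max_distance_ms


# Hypothetical enrollment: the same 5-key phrase typed three times
profile = enroll([
    [0, 120, 230, 380, 470],
    [0, 130, 235, 390, 485],
    [0, 110, 225, 370, 460],
])
print(matches_user(profile, [0, 125, 235, 385, 478]))  # True: similar rhythm
print(matches_user(profile, [0, 40, 80, 120, 160]))    # False: uniform, machine-like timing
```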

The Future of Cybersecurity in an AI-Powered Threat Landscape

As generative AI continues to advance, cyber threats will become more autonomous, targeted, and difficult to detect. Cybersecurity experts are responding by embedding AI at every layer of defense—from identity and endpoints to networks and applications. The future of cybersecurity lies in AI-versus-AI defense models, where intelligent systems monitor, adapt, and respond faster than human attackers can operate. Organizations that invest in AI-driven cybersecurity today will be better positioned to protect digital assets, maintain trust, and stay resilient in an increasingly automated threat environment.

Final Thoughts

AI-generated threats represent a new era of cyber risk, where trust itself is under attack. Cybersecurity experts are responding with AI-powered detection, zero trust frameworks, and proactive education to safeguard organizations and individuals. The fight against deepfake and AI-driven attacks is ongoing, but with layered defenses and vigilance, digital trust can be preserved.

Frequently Asked Questions on AI-Generated Cyber Threats

Q1: What are AI-generated cyber threats?

AI-generated threats include deepfake impersonations, AI-powered phishing, automated malware, and synthetic media attacks that exploit trust and identity.

Q2: How are cybersecurity experts fighting deepfake attacks?

Experts use deepfake detection tools, voice and video watermarking, behavioral biometrics, and zero trust frameworks to identify and block synthetic content.

Q3: Why is the modern attack surface more vulnerable with AI?

AI expands the attack surface by enabling large-scale phishing, rapid vulnerability scanning, and realistic impersonations that bypass traditional defenses.

Q4: What tools are available to detect AI-generated threats?

Tools include synthetic media detection platforms, extended detection & response (XDR), AI-powered threat intelligence, and secure communication protocols.

Q5: What best practices help organizations defend against AI threats?

Best practices include layered security, employee education, safe word protocols, regular updates to detection tools, and cross-industry collaboration.